Abstract Style: Space-Based Architecture

Many developers strive for elegance and efficiency in their code. Imagine a hypothetical developer who takes this too far. Perhaps this developer looks back in shame at the sloppy, inefficient implementations their much less experienced self had written. They were, undoubtedly and objectively, “bad.” Over time, their code grew cleaner and more efficient. They could measure progress in wall-clock time, algorithmic complexity, or other quality metrics. Somewhere, however, the pendulum swung too far. If their “bad” code was slow, and their “better” code is faster, it stands to reason that even faster is even better. They reached a point where they would squeeze out every spare microsecond at all costs. The result was difficult to read, change, and understand; perhaps “the juice was not worth the squeeze.”

Performance can be a valuable system capability, but only in context. Remember the “Mad Potter’s” wisdom on the difference between “a lot” and “enough.” Architecture trade-offs must be weighed across many dimensions. Never trade an excess of one capability for a deficit of a more important capability. That said, sometimes performance is among that shortlist of business-critical capabilities, perhaps at the very top. What constraints induce high performance at the architectural level? Read on to learn about the Space-Based Architecture.

This post is part of a series on Tailor-Made Software Architecture, a set of concepts, tools, models, and practices to improve fit and reduce uncertainty in the field of software architecture. Concepts are introduced sequentially and build upon one another. Think of this series as a serially published Leanpub architecture book. If you find these ideas useful and want to dive deeper, join me for Next Level Software Architecture Training in Santa Clara, CA, March 4-6, for an immersive, live, interactive, hands-on three-day software architecture masterclass.

Introducing the Space-Based Architecture Pattern

This architecture takes its name from the concept of tuple space, an implementation of a shared memory space for parallel/distributed computing. A tuple space provides a repository of tuples that can be addressed and manipulated concurrently. In this architecture, potential performance bottlenecks are aggressively eliminated, which results in a high-performance and massively scalable architecture. The database is decoupled from the application: each worker node stores the entire dataset in memory in the form of replicated data grids, eliminating all direct database reads and writes. Writes to the in-memory dataset are synced between worker nodes through some form of middleware, which also asynchronously reads from and writes to the underlying database. A separate component monitors overall load and scales the number of available workers up or down based on demand.
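To make the tuple-space idea concrete, here is a minimal in-process sketch. It assumes Linda-style `write`, `read`, and `take` operations and uses `None` as a wildcard in templates. The class and method names are illustrative, not any product's API, and unlike classic Linda these operations return `None` instead of blocking when nothing matches.

```python
import threading
from typing import Optional


class TupleSpace:
    """Minimal thread-safe, in-process tuple space (Linda-style write/read/take)."""

    def __init__(self) -> None:
        self._tuples: list[tuple] = []
        self._lock = threading.Lock()

    @staticmethod
    def _matches(template: tuple, candidate: tuple) -> bool:
        # None in a template position matches any value in that position.
        return len(template) == len(candidate) and all(
            t is None or t == c for t, c in zip(template, candidate)
        )

    def write(self, tup: tuple) -> None:
        """Add a tuple to the space."""
        with self._lock:
            self._tuples.append(tup)

    def read(self, template: tuple) -> Optional[tuple]:
        """Return the first matching tuple without removing it, or None."""
        with self._lock:
            return next((t for t in self._tuples if self._matches(template, t)), None)

    def take(self, template: tuple) -> Optional[tuple]:
        """Remove and return the first matching tuple, or None."""
        with self._lock:
            for i, t in enumerate(self._tuples):
                if self._matches(template, t):
                    return self._tuples.pop(i)
            return None


space = TupleSpace()
space.write(("order", 42, "pending"))
print(space.read(("order", None, None)))  # ('order', 42, 'pending')
print(space.take(("order", 42, None)))    # ('order', 42, 'pending')
print(space.read(("order", None, None)))  # None
```

Because every worker addresses tuples by content rather than by location, many workers can coordinate through the same space concurrently; the lock here simply keeps each operation atomic.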

Although neither easy nor cheap, this abstract style forms the foundation of a highly performant, highly scalable, and highly elastic system architecture. It offers a meaningful alternative to scaling a database or bolting caching technologies onto a less scalable architecture. Let’s explore what this blackboard/worker model looks like in practice.

The Processing Unit

The processing unit is the component of the system that contains the application logic and performs the business functions, whatever they may be. For practical reasons, the processing unit is generally a fairly fine-grained component with carefully scoped data and fast cold-start times. Consequently, a system may contain multiple tuple spaces, depending on its overall size and scope. In addition to the application logic, the processing unit contains the in-memory data grid and the replication engine.
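A minimal sketch of a processing unit, assuming a hypothetical inventory-reservation business function. The logic reads and writes only its local in-memory grid and hands each change to a replication hook (`publish`, an assumed callback standing in for the replication engine) instead of calling a database.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical change event handed to the replication engine: (unit_id, key, new_value).
ChangeEvent = tuple[str, str, int]


@dataclass
class ProcessingUnit:
    """Sketch: business logic + local in-memory grid + replication hook."""
    unit_id: str
    publish: Callable[[ChangeEvent], None]              # assumed replication hook
    grid: dict[str, int] = field(default_factory=dict)  # local in-memory data grid

    def reserve(self, sku: str, qty: int) -> bool:
        """Hypothetical business function: reserve stock entirely in memory."""
        available = self.grid.get(sku, 0)
        if qty <= 0 or qty > available:
            return False
        self.grid[sku] = available - qty
        # No database call on the request path; the change is only published.
        self.publish((self.unit_id, sku, self.grid[sku]))
        return True


events: list[ChangeEvent] = []
unit = ProcessingUnit("pu-1", publish=events.append, grid={"widget": 10})
print(unit.reserve("widget", 3))  # True
print(unit.grid["widget"])        # 7
print(events)                     # [('pu-1', 'widget', 7)]
```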

The Data Grid

The data grid is the replicated in-memory state of the processing units and is a central concern of this architecture. Every processing unit must hold the same state; because replication is typically asynchronous, that state converges shortly after each write rather than being identical at every instant. The data grid may reside entirely within the processing unit, with replication happening asynchronously between processing units. Some implementations, however, require an external controller, in which case the controller element of the data grid forms part of the virtualized middleware layer.
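The sketch below illustrates asynchronous replication between grid replicas. Each `GridNode` (a hypothetical class) applies writes locally at once and queues them for its peers; `drain` stands in for the replication engine delivering those queued updates. Between a write and a drain the replicas temporarily disagree, which is why identical state is reached eventually rather than instantly.

```python
from collections import deque


class GridNode:
    """One replica of an in-memory data grid with asynchronous, push-based replication."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: dict[str, object] = {}
        self.peers: list["GridNode"] = []
        self.inbox: deque = deque()  # pending (key, value) updates from peers

    def put(self, key: str, value: object) -> None:
        # Apply locally right away; peers see the write only after draining.
        self.data[key] = value
        for peer in self.peers:
            peer.inbox.append((key, value))

    def drain(self) -> None:
        # Stand-in for the replication engine delivering queued updates in order.
        while self.inbox:
            key, value = self.inbox.popleft()
            self.data[key] = value


def connect(*nodes: GridNode) -> None:
    """Make every node a replication peer of every other node."""
    for node in nodes:
        node.peers = [n for n in nodes if n is not node]


a, b = GridNode("a"), GridNode("b")
connect(a, b)
a.put("session:1", "cart=3")
print(b.data.get("session:1"))  # None: the update is still queued
b.drain()
print(b.data.get("session:1"))  # cart=3
```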

The Virtualized Middleware Layer

This layer contains the components that handle infrastructure concerns, such as controlling aspects of data synchronization and request handling. These components may be off-the-shelf products or custom code.

The Messaging Grid

The messaging grid is essentially a load balancer in this architecture. It manages requests and session state, determines which processing units are available, and distributes requests accordingly.
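As a rough illustration of the messaging grid's job, this sketch routes requests round-robin across available processing units and keeps each session pinned to its unit until that unit becomes unavailable. `MessagingGrid`, `route`, and `mark_down` are hypothetical names; in practice this role may be filled by off-the-shelf middleware or custom code.

```python
import itertools


class MessagingGrid:
    """Sketch: round-robin routing over available units, with sticky sessions."""

    def __init__(self, unit_ids: list[str]) -> None:
        self.available: set[str] = set(unit_ids)
        self._rr = itertools.cycle(list(unit_ids))
        self.sessions: dict[str, str] = {}  # session id -> unit id

    def mark_down(self, unit_id: str) -> None:
        """Stop routing to a unit (e.g. it failed or was scaled in)."""
        self.available.discard(unit_id)

    def route(self, session_id: str) -> str:
        # Keep a session on its unit while that unit is still available.
        unit = self.sessions.get(session_id)
        if unit in self.available:
            return unit
        if not self.available:
            raise RuntimeError("no processing units available")
        # Otherwise assign the next available unit in round-robin order.
        for candidate in self._rr:
            if candidate in self.available:
                self.sessions[session_id] = candidate
                return candidate


grid = MessagingGrid(["pu-1", "pu-2"])
print(grid.route("alice"))  # pu-1
print(grid.route("bob"))    # pu-2
print(grid.route("alice"))  # pu-1 (sticky session)
grid.mark_down("pu-1")
print(grid.route("alice"))  # pu-2 (failover)
```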

The Processing Grid

This optional component handles request orchestration when multiple processing units are required to satisfy a given request.
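A processing grid can be sketched as a scatter-gather coordinator: it fans a request out in parallel to the processing units it needs and merges their partial results. The unit names and handler functions here are hypothetical stand-ins for real processing units.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Handler = Callable[[dict], dict]


class ProcessingGrid:
    """Sketch: fan a request out to several processing units and merge their replies."""

    def __init__(self, units: dict[str, Handler]) -> None:
        self.units = units  # hypothetical unit name -> request handler

    def handle(self, request: dict, required: list[str]) -> dict:
        if not required:
            return {}
        with ThreadPoolExecutor(max_workers=len(required)) as pool:
            futures = {name: pool.submit(self.units[name], request) for name in required}
            result: dict = {}
            # Merge partial results in a fixed order; each unit contributes its own keys.
            for name in required:
                result.update(futures[name].result())
        return result


grid = ProcessingGrid({
    "pricing": lambda req: {"price_cents": 999 * req["qty"]},
    "inventory": lambda req: {"in_stock": req["qty"] <= 5},
})
print(grid.handle({"qty": 2}, ["pricing", "inventory"]))
# {'price_cents': 1998, 'in_stock': True}
```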

The Deployment Manager

The deployment manager acts as the supervisor in this style, observing load and capacity and adding processing units to, or removing them from, the pool as required.
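The deployment manager's decision can be reduced to a scaling rule plus a reconciliation step. The rule below (a fixed capacity per unit, 20% headroom, and min/max bounds) is an assumed policy for illustration, not a prescribed formula.

```python
import math


def desired_units(load_rps: float, capacity_per_unit_rps: float,
                  min_units: int = 1, max_units: int = 20,
                  headroom: float = 0.2) -> int:
    """Hypothetical scaling rule: enough units for current load plus headroom, clamped."""
    if capacity_per_unit_rps <= 0:
        raise ValueError("capacity_per_unit_rps must be positive")
    needed = math.ceil(load_rps * (1 + headroom) / capacity_per_unit_rps)
    return max(min_units, min(max_units, needed))


def reconcile(current: int, desired: int) -> str:
    """Describe the action the supervisor would take to move from current to desired."""
    if desired > current:
        return f"start {desired - current} unit(s)"
    if desired < current:
        return f"stop {current - desired} unit(s)"
    return "no change"


target = desired_units(420, 100)  # ceil(420 * 1.2 / 100) = ceil(5.04) = 6
print(target)                     # 6
print(reconcile(4, target))       # start 2 unit(s)
```

Running this rule on a timer against observed load gives the observe-and-adjust loop described above; the clamps keep a traffic spike or a metrics glitch from scaling the pool without bound.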

Data Pumps

Although in theory this architecture could hold all critical data in volatile memory indefinitely, we must plan for inevitable cold-start and cold-restart scenarios. Data pumps provide eventual consistency between the in-memory datasets and persistent storage in the database. Data pumps subscribe to the state changes asynchronously broadcast by processing units and then synchronously write those changes to disk. A data reader is a separate pump responsible for providing initial state to the processing units in the event of a cold start.
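A minimal write-behind data pump and data reader, assuming a Python `dict` as a stand-in for the database. Processing units publish changes without waiting, a background thread persists them in order, and the reader copies the stored state for a cold start. `DataPump`, `publish`, `flush`, and `data_reader` are hypothetical names; a real pump would write to durable storage and handle retries and failures, which this sketch omits.

```python
import queue
import threading


class DataPump:
    """Sketch: consume change events asynchronously and persist them to a store."""

    def __init__(self, store: dict) -> None:
        self.store = store  # stand-in for the persistent database
        self.events: queue.Queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def publish(self, key: str, value: object) -> None:
        # Called by processing units; returns immediately (fire-and-forget).
        self.events.put((key, value))

    def _run(self) -> None:
        while True:
            key, value = self.events.get()
            self.store[key] = value  # the synchronous write, off the request path
            self.events.task_done()

    def flush(self) -> None:
        # Block until every queued event has been persisted.
        self.events.join()


def data_reader(store: dict) -> dict:
    """Sketch: load initial state for a processing unit on cold start."""
    return dict(store)


db: dict = {}
pump = DataPump(db)
pump.publish("account:7", 120)
pump.publish("account:7", 95)
pump.flush()
print(data_reader(db))  # {'account:7': 95}
```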

Core Constraints

These capabilities are induced by the following core constraints:

- Fine-Grained Components

- High Operational Automation

- All Data Stored In-Memory

- Asynchronous State Replication and Database Writes

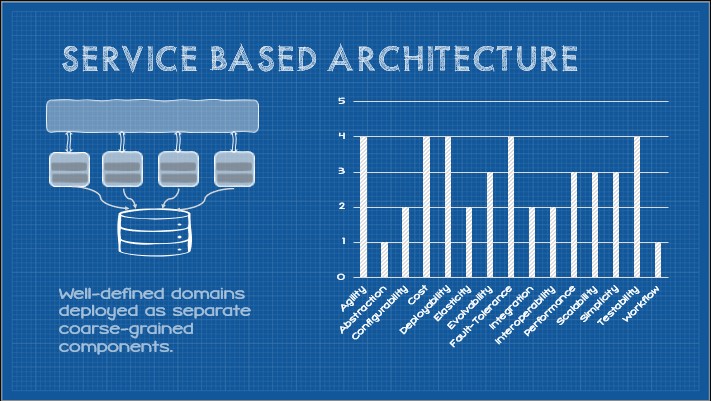

Constraint: Fine-Grained Components

This constraint states that the overall system is decomposed into fine-grained components. Precise granularity is not prescribed, but generally a single component is scoped to a single bounded context. This granularity sits on the continuum between coarse-grained components (e.g., an n-tier system) and the finest-grained components (e.g., microservices).

Elasticity (+3)

Decomposed components are generally lighter-weight and start faster, improving elasticity compared to monolithic systems or coarse-grained components.

Evolvability (+3.5)

Decomposition into components enables different parts of the system to evolve at different rates. Adding or changing functionality may often be scoped to a single component.

Fault-tolerance (+3.5)

Independent components of any granularity begin to reduce the operational cost of running any given component. It becomes feasible to run multiple instances of a component, which reduces the risk of system-wide failure when any individual instance fails. Furthermore, each component generally represents only a subset of the overall system functionality, which supports implementation decisions that keep the majority of the system functioning when one component fails.

Deployability (+3)

A system decomposed into components paves the way for smaller-scoped and partial deployments. Deployments scoped to individual components reduce deployment risk and simplify pipelines.

Scalability (+3)

Smaller components may be scaled individually, directing additional compute to where it is most needed.

Testability (+3)

Breaking an application into smaller components reduces the total testing scope, and because fine-grained components are usually split along bounded-context boundaries, inter-component testing scope shrinks as well.

Agility (+1.5)

Decomposing a system into independent components of any granularity with thoughtful module boundaries has the potential to improve business agility. Components may be independently evolved and deployed with reduced overall testing scope.

Configurability (+0.5)

Decomposing a system allows components to be individually configured and each component may utilize different framework versions, runtimes, languages, or platforms.

Integration (-2)

Fine-grained components are generally managed by separate teams. More coordination is required, increasing coordination costs.

Interoperability (-2)

Fine-grained components are usually scoped to a bounded context. This introduces interoperability challenges when communication spans domain boundaries and ubiquitous languages differ.

Performance (-2)

Fine-grained components generally require more network calls because many common functions span multiple components, increasing latency and degrading performance.

Simplicity (-2)

This constraint requires significant investment in infrastructure and pipeline development and typically requires more complex cloud implementation.

Constraint: High Operational Automation

This constraint dictates that the architecture exhibit a high degree of operational automation.

Elasticity (+3)

High operational automation enables more robust and responsive automated scaling of resources up or down as needed.

Performance (+3)

Automated elasticity lets the system add capacity under load, improving overall performance when it is needed and, in some cases, compensating for network overhead.

Configurability (+2)

Investment in operational automation typically brings configuration management into the deployment/operational toolchain.

Evolvability (+2)

The induced agility and deployability improve overall system evolvability.

Agility (+1.5)

High operational automation correlates with high maturity and automation elsewhere in the build/deployment pipeline. It also allows for lower-risk deployments due to the deployment management strategies it enables.

Deployability (+1.5)

High operational automation also allows for lower-risk deployments due to the deployment management strategies it enables, such as blue-green or canary releases.

Fault-tolerance (+1)

A system with high operational automation provides more scope for managing and mitigating partial failures and recovery.

Testability (+1)

High operational automation correlates with high maturity and automation elsewhere in the build/deployment pipeline, which improves system testability.

Scalability (+0.5)

High operational automation offers some capability to scale up resources on demand.

Cost (-1)

This constraint introduces increased operational cost, development investment, and potential licensing costs for operational middleware.

Simplicity (-1)

This constraint requires significant investment in infrastructure and pipeline development and typically requires more complex cloud implementation.

Constraint: All Data Stored In-Memory

This constraint dictates that components store all data in-memory.

Performance (+5)

This constraint eliminates database I/O, along with the network round trips it requires, from the request path, massively improving performance.

Scalability (+5)

By removing the database and external I/O as scalability bottlenecks, scalability becomes nearly linear, bounded mainly by available memory and replication overhead.

Deployability (-1)

This constraint reduces deployability as deployments force a system-wide reload of data.

Configurability (-2)

This constraint tends to reduce configurability as data structures are typically fixed.

Fault-tolerance (-1.5)

This constraint introduces new failure conditions that can be difficult to manage. Crashes may result in lost state.

Cost (-3.5)

This constraint introduces high resource utilization and increased baseline resource requirements. It also typically means more compute resources must run at all times to keep the system available.

Testability (-4)

This constraint significantly weakens testability when part of a distributed architecture. Although core logic may be easy to test, various failure conditions such as data collisions and race conditions are extremely difficult to test for at design/build time.

Constraint: Replicated Shared Data Grid

This constraint prescribes the use of a replicated, shared data grid, common in space-based architectures, emphasizing data consistency and availability by maintaining multiple replicas of the data. The data grid handles shared state and replication, helping to ensure that if one node fails, another can take its place with little or no data loss, enabling high availability and fault tolerance.

Integration (+3)

This constraint requires well-defined data schemas and integration contracts, improving integration.

Interoperability (+3)

This constraint requires well-defined data schemas and integration contracts, improving overall component interoperability.

Deployability (+0.5)

The replicated, shared data grid operates independently of any running instances. This improves deployability in some situations by supporting incremental deployments rather than full shutdowns and cold starts.

Evolvability (+1)

Evolvability is improved as the shared data grid infrastructure may be extended to additional tuple spaces as well as supporting partial deployments to reduce change costs.

Fault-tolerance (-1)

This constraint introduces new potential failure conditions, including replication errors, collisions, and race conditions, that may be difficult to contain and mitigate.
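One way to surface collisions is to attach a version number to each key, assumed here for illustration; real products may use vector clocks or other schemes instead. In this sketch a replica accepts a remote update only if its version is newer, and records a conflict when two replicas independently produce the same version with different values. `Versioned` and `VersionedReplica` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: object
    version: int


class VersionedReplica:
    """Sketch: detect replication collisions with per-key version numbers."""

    def __init__(self) -> None:
        self.data: dict[str, Versioned] = {}
        self.conflicts: list[tuple[str, Versioned, Versioned]] = []

    def local_write(self, key: str, value: object) -> Versioned:
        current = self.data.get(key)
        new = Versioned(value, (current.version if current else 0) + 1)
        self.data[key] = new
        return new  # the update that would be replicated to peers

    def apply_remote(self, key: str, incoming: Versioned) -> None:
        current = self.data.get(key)
        if current is None or incoming.version > current.version:
            self.data[key] = incoming
        elif incoming.version == current.version and incoming.value != current.value:
            # Two replicas wrote the same key concurrently: a collision.
            self.conflicts.append((key, current, incoming))
        # Otherwise the update is stale or a duplicate and is ignored.


r1, r2 = VersionedReplica(), VersionedReplica()
u1 = r1.local_write("seat-12A", "alice")
u2 = r2.local_write("seat-12A", "bob")
r1.apply_remote("seat-12A", u2)
print(len(r1.conflicts))  # 1
```

Detection is the easy half; deciding which write wins (or compensating the loser) is application-specific, which is part of why these failure modes are hard to test at design time.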

Cost (-3)

The operational costs and licensing costs of products that implement this data grid can be quite high.

Summary

This abstract style offers unique advantages in linear scalability and high elasticity, but it also carries significant overhead and challenges in managing synchronization.