Insights, Shallow Work, and the Next Correction

2020 was a rough year. Much of my income had come from live performances as a magician, live events as a speaker and trainer, and in-person consulting gigs. By March of 2020, every live event was being dubbed a “super spreader” event, markets were cooling, and enterprises were preparing to “batten down the hatches” in anticipation of unknown societal and financial disruption. My livelihood was in grave jeopardy. My wife and I tightened our belts, hoping to ride out the storm, but by the summer it became clear that this particular storm had no end in sight. I eventually accepted a job as a principal software architect at an intriguing startup within the enterprise and quickly rose through the ranks to become its Chief Architect.

As my responsibilities grew, I realized it would be crucial to scale myself and my contributions, so my focus turned to hiring, and I had never seen a market like it. Salary expectations had more than doubled since I was last in the market. Signing bonuses were being offered at an unprecedented scale. As it became harder and harder to attract talent, compensation only continued to climb. I won’t lie, I was a little bitter and a little envious. The direct reports I hired were earning substantially more than I was. I expressed these feelings to a colleague who asked, “Have you considered looking yourself?” My response was, “It crossed my mind, but I think it would be a bad idea. ‘Those who live by the sword, die by the sword.’” In other words, the tech market looked an awful lot like a bubble. I didn’t know when it would burst, but I knew it would. Companies were responding to the market changes by “warchesting” talent: proactively hiring for roles that didn’t even exist yet. Eighteen months later came some of the most brutal layoffs I’ve seen since the 2000-2001 era, and almost all of those who found themselves swept up in the cuts were downsized through no fault of their own.

I believe another correction is imminent, but this one might be a good thing, depending on how we navigate it.

(It turns out it was definitely a bubble.)

(Don’t Fear) the Reaper

Since ChatGPT rocketed the capabilities of Large Language Models (LLMs) into the collective consciousness in late 2022, there has been much handwringing about the future prospects for various skilled trades, including software development. The first time I saw an IDE generate a method based on nothing but a name was an eye-opening experience. I immediately heard echoes of my secondary-school girlfriend warning me that there was no future as a programmer because “eventually computers will be able to program themselves.” Oh, how I laughed at that idea at the time! And now, like the Luddites of the 19th century, we fear that the years spent learning and mastering our craft will soon be for naught.

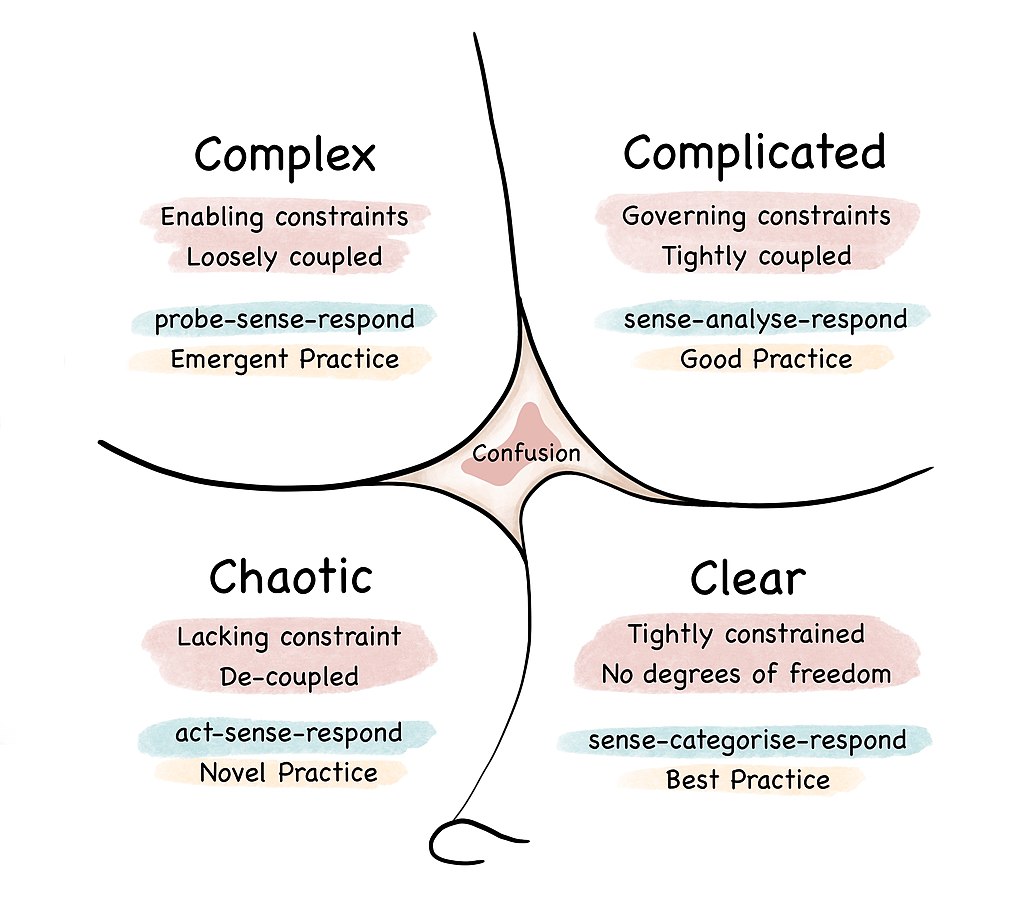

On the other hand, there is a cohort of people (spanning many industries) gleefully boasting on social media that “I’ve been able to automate 90% of my job using ChatGPT!” Fundamentally, I believe the value proposition of knowledge work is beginning to change (and a job that can be almost entirely automated is the real wake-up call). For me, any task that can be easily automated is a strong signal that the task is no longer uniquely valuable. I don’t look at the output of ChatGPT and say, “Oh, wow, look how powerful this model is!” Instead, I say, “Oh, I guess this task isn’t all that complex after all.” That’s not intended to be dismissive of the work of OpenAI and others; it is still undeniably impressive to use natural language prompts to automate tasks that historically required semi-skilled humans. However, if the current crop of large language models can consistently and repeatedly automate a task, that task can be boiled down to well-defined rules and best practices. In other words, the task resides in the “Clear” Cynefin domain.

In my last piece, I wrote about the Cynefin Framework. Below is a visualization of its four main domains: Clear, Complicated, Complex, and Chaotic.

If most of what we do today exists in the “Clear” domain, we must shift our focus to the “Complicated” and “Complex” domains to avoid being disrupted. Many of us have longed to spend more time in these more interesting and challenging domains, but our workloads have required us to spend a great deal of time in the “Clear” domain as a means to an end, leaving little room for the more interesting work. Automating that “Clear” work may be the next disruption.

Throughout the history of technology, change has been constant. In almost every case, these changes have simply freed us to focus on harder and harder problems. Less than a century ago, “computer” was not a machine but an occupation, until a new technology arrived and disrupted that career. Some of those workers were displaced; others moved up the value chain.

What does it mean to “move up the value chain”? Tools like ChatGPT give us a useful metric. If a category of work can be easily and reliably automated by an LLM (or any technology), it’s not that valuable anymore. Let the language models handle the work they are good at, and focus your time and attention on the work that insightful, thinking humans are good at. We need to focus on work that requires higher levels of cognitive ability, critical thinking, nuance, problem-solving skills, and domain-specific expertise that can’t yet be easily duplicated by language models.

The Death of Shallow Work?

In my career as a speaker, trainer, and coach, I spend a lot of time discussing tools and strategies for staying focused and productive in the distracting, interrupt-driven environments we find ourselves working in. Much of this stems from developing my own coping skills and strategies as a neurodivergent individual with a fairly severe ADD diagnosis, as well as from the books I have read on the subject. One of the most influential books, for me, was Cal Newport’s Deep Work.

Newport defines “Deep Work” as follows:

“Professional activities performed in a state of distraction-free concentration that push your cognitive capabilities to their limit. These efforts create new value, improve your skill, and are hard to replicate.”

He contrasts this with “Shallow Work”:

“Non-cognitively demanding, logistical-style tasks, often performed while distracted. These efforts tend not to create much new value in the world and are easy to replicate.”

In the book, Newport explains what Deep Work is, why it’s valuable, and why it’s so difficult to find time for it. He also offers strategies for carving out time to commit to it. Overall, it was a very inspiring read, although it may soon become obsolete. Much of the “Shallow Work” of the past consisted of things like:

- Squandering our time, energy, and creativity composing emails to answer general inquiries

- Writing status updates

- Writing endless amounts of boilerplate code

- Searching for reminders on how to perform routine coding tasks

- Writing or updating documentation

- Time tracking and reporting

These have always been “necessary evils” in our field, but with tools like large language models, they can be automated to varying degrees, radically reducing our load of “Shallow Work.” For example:

- Responding to emails: Language models can draft email responses based on the content and context of the received email. You still need human input for more nuanced or context-specific replies, but you can typically get a draft that is 90% done right out of the gate.

- Updating project management tools: While LLMs could help draft task descriptions or progress updates, integrating them with project management tools and ensuring accurate input would still require some human intervention.

- Writing boilerplate code: ChatGPT and GitHub Copilot are very good at writing boilerplate code. This has been a productivity game-changer for me. They are equally helpful for getting the correct syntax for various languages and libraries.

- Writing or updating documentation: LLMs could generate initial drafts of documentation or update existing ones based on provided guidelines or templates. Human review would still be necessary to ensure accuracy and clarity. (Also, be very careful giving your source code and design docs to a model that trains on user input!)

- Time tracking and reporting: ChatGPT could draft reports based on logged hours or progress data, but the actual time tracking would still require input from the software engineer.

Perhaps we no longer need to cloister ourselves away for weeks and months on end to pursue the “Monastic Deep Work Philosophy,” or snatch 15 minutes of focus here and 30 minutes there to pursue the “Journalistic Deep Work Philosophy.” Instead, we can simply outsource shallow work to language models and spend more and more of our time on Deep Work. Deep Work is much more satisfying, and it is where the real value lies.

Insight Thinking

Language models are trained on a large corpus of text. Solved problems, best practices, well-defined solutions: language models excel at these tasks. These have always been “easy” to replicate, but now they can be replicated almost for free. Emergent capabilities of language models often involve detecting subtle patterns that may not be obvious to us with our much smaller training set. When it comes to the emergent capabilities of AI, the consensus seems to be that “these AI models are more powerful than we thought,” but I would argue that perhaps they simply show that certain problems are easier than we thought.

What we can’t seem to automate is insight thinking.

Insight thinking produces the “aha” or “Eureka” moments: the sudden realization of a solution to a problem, or a deeper understanding of a concept. It involves a mental restructuring of the information at hand, which results in a new perspective or a novel solution. Insight thinking is a type of creative problem-solving that often seems to come out of nowhere. It’s why range and breadth can often triumph over specialization. Insight thinking requires a breadth of knowledge that is difficult to develop when most of your time is spent on shallow work.

With new technologies that can competently operate in the “Clear” domain, we can free up our time to learn, to grow, to create, and to think. This is how we navigate the next disruption. We can spend our time growing and thinking, or we can spend our time bragging on social media about how our jobs can be automated (and then lamenting on social media that our jobs have been automated).

Don’t Outsource Thinking

One word of caution: use these tools wisely, and be mindful of when to automate and when not to. For example, I can easily give ChatGPT an article and ask it to summarize the text for my notes. While this saves time, it robs me of the mental distillation of my thoughts, which is part of the learning process. As a knowledge worker who wishes to remain competitive for another couple of decades, I consider thinking and learning the most valuable things I can do with my time.

In short, we knowledge workers should leverage AI tools to enhance our productivity and creativity, but not at the expense of our critical thinking and learning abilities.