The Rise and Fall of Software Architecture

I’m currently in Budapest, Hungary, speaking at and attending a conference. Although the attendees are smart and passionate and the speakers have many valuable things to say, I see the continuation of an alarming trend in the tech industry: the sessions that promise simple answers are wildly popular, while the sessions that offer insights on how to improve our thinking and mental models are relatively sparsely attended. This culture of “don’t make me think, just give me the answers” is crippling our industry.

Ask your average nerd “What is the answer to life, the universe, and everything?” and you’ll receive a prompt and definitive answer: “42.” This was once a shibboleth to distinguish fans of Douglas Adams, but today it is just part of the nerd-culture lexicon. It was once a joke poking fun at pop philosophers who were more interested in answers than in questions (and making the point that an answer without its question is meaningless), but that underlying attitude is now also firmly cemented into our engineering culture.

I want to be clear: I don’t see this as some kind of moral failure. Often the underlying motivations come from a genuine and sincere place. We want to produce the best work. We have learned that “bad” design leads to undesirable outcomes and “good” design leads to desirable outcomes. Good, however, is relative and extremely subjective.

We are all under near-constant pressure to deliver faster and faster. Our industry is changing and evolving at a mind-boggling pace. It takes nearly all of our time and mental energy just to try to keep up. It is no wonder there is an insatiable thirst for answers. Unfortunately, sometimes we must first slow down in order to accelerate.

The Rise of Software Architecture

In 1975, Frank DeRemer and Hans Kron wrote Programming-in-the-Large Versus Programming-in-the-Small, which is arguably one of the first explorations of the field that would ultimately become “Software Architecture.” As software grew in scale and complexity, it was clear we would be facing new challenges that needed addressing. Around a decade later, Fred Brooks wrote No Silver Bullet: Essence and Accident in Software Engineering.

Of all the monsters who fill the nightmares of our folklore, none terrify more than werewolves, because they transform unexpectedly from the familiar into horrors. For these, one seeks bullets of silver that can magically lay them to rest.

The familiar software project has something of this character (at least as seen by the non-technical manager), usually innocent and straightforward, but capable of becoming a monster of missed schedules, blown budgets, and flawed products. So we hear desperate cries for a silver bullet, something to make software costs drop as rapidly as computer hardware costs do.

But, as we look to the horizon of a decade hence, we see no silver bullet. There is no single development, in either technology or management technique, which by itself promises even one order of magnitude improvement in productivity, in reliability, in simplicity. In this paper we shall try to see why, by examining both the nature of the software problem and the properties of the bullets proposed.

- Fred Brooks, No Silver Bullet

In the decades since Fred Brooks wrote these immortal words, his observation has continued to prove true. All we have discovered are new sources of essential complexity, along with more accidental complexity every time we mistake the solution to one organization’s or domain’s essential complexity for a coveted “silver bullet” that will relieve every organization’s pain.

Architecture as a Formal Discipline

As software development stumbled through its first few decades of infancy, we began to better understand that different approaches to organizing code and modules would produce different results. It wasn’t enough to produce functional code, or merely correct code. Someone (or several someones) needed to be thinking about the big picture and how to design and assemble these increasingly complex software systems. By the late 1980s, the idea of software architecture had entered our industry lexicon, and by 1990 the first book on software architecture had been published.

Over the next two decades, the world changed dramatically. As we pushed software to do more for ever-larger user bases, architectural thinking provided many answers and propelled us into a 21st-century world powered by software. We’ve learned a lot, we’ve accomplished a lot, and we are better for it.

The Fall of Software Architecture

From Diffusion to Dilution

I remember, with great fondness, those heady early days of the web. Tech companies grappled with the needs of unprecedented scale and demand. When the established patterns and approaches to building software failed to deliver the crucial architectural “-ilities” (scalability, availability, and the like) that a specific product or organization needed, novel solutions were developed through a great deal of trial, error, study, experimentation, and iteration.

Of course, these engineers were eager to share what they had learned: how they had slain their particular species of werewolf. These ideas began to diffuse from first-person accounts of the problem and solution into third-person summaries (i.e., “this is how they solved this particular problem”). Like a distributed game of telephone played across blogs and conferences, the message changed. Ultimately it became “This is what Google/Netflix/Amazon/etc. is doing. This is the new best practice. This is the silver bullet.”

The Decreasing Half-Life of Best Practices

I taught myself to program in the late 1980s. My first computer was not capable of multitasking. It did not have a hard drive. Running a program involved placing the program’s disk in the drive and turning the machine on. If I wanted to run a different program, I had to swap disks and reboot the computer. The utility of that machine was limited to the disks I had available; without those disks, the computer would do nothing other than flash “check startup device” on the screen.

I realize this is beginning to sound like an old man’s “back in my day, we had to walk to school in the snow, uphill both ways” rant, but you have to understand the power I discovered with that little BASIC interpreter baked into the machine’s ROM. By learning to program, I unlocked the power to transform my little Apple ][ from a machine that could do very little into a machine that could do anything I cared to imagine. Through the power of brute force and ignorance, I made that computer do many things.

My code was not elegant; it was barely functional… but, as Miracle Max once explained, “There’s a big difference between mostly dead and all dead. Mostly dead is slightly alive.” Barely functional is still functional. The code did what I wanted it to.

In the formative years that followed, I had many wise and knowledgeable teachers. They taught me that it wasn’t enough to write (barely) functional code; I had to write robust code. I had to write maintainable code. I had to write understandable code. I had to write secure code. There may be “more than one way to do it,” but not all approaches are created equal. I learned several “best practices” from these teachers and mentors.

My professional career in tech now spans three decades and, while some of the best practices I learned back in the 1900s still have value, many do not. As the scope and scale of what we can do with software has exploded, best practices have become more contextual. Some of the best practices I was once taught do not apply at all.

What has been interesting to observe over the past 20 years is that best practices no longer age like wine; they age like milk. We prescribe or parrot best practices as though we are all trying to accomplish the same things with software, with the same teams, at the same level of technical sophistication. We’re not. So many best practices focus on tech stacks and tooling, and the same is true in architecture.

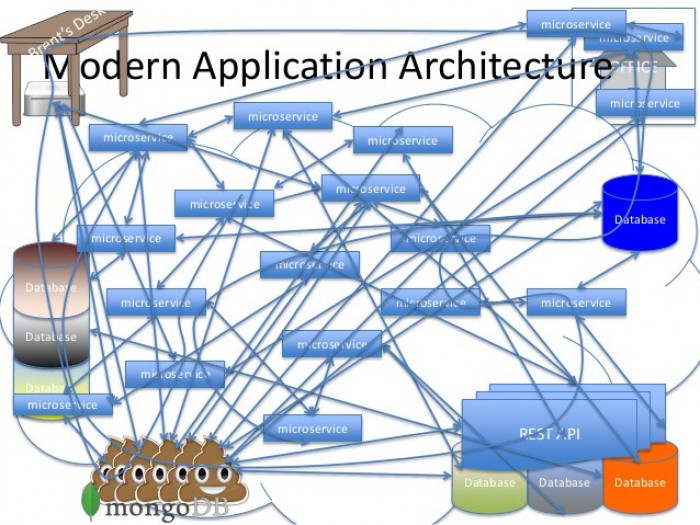

In 2014, Martin Fowler (with James Lewis) wrote a famous blog post on the microservices architecture, all but proclaiming it to be a new ‘best practice.’

“We’ve seen many projects use this style in the last few years, and results so far have been positive, so much so that for many of our colleagues this is becoming the default style for building enterprise applications.”

Just over a year later, he published what amounted to a complete 180.

“As I hear stories about teams using a microservices architecture, I’ve noticed a common pattern… Almost all the cases where I’ve heard of a system that was built as a microservice system from scratch, it has ended up in serious trouble…

“This pattern has led many of my colleagues to argue that you shouldn’t start a new project with microservices, even if you’re sure your application will be big enough to make it worthwhile.”

We want to be better engineers, but our propensity to chase elusive silver bullets only results in accidental complexity.

Complexity is Everywhere

In software architecture there are no best practices, only trade-offs. We want to give our objective best, except there is no objective best, only what is contextually and subjectively optimal. This is why I was angry enough to write this post.

The session (whose title and speaker I won’t mention) followed a common fallacy in technology: that newer is better and that any problem can be solved if you throw enough code at it. The speaker told the story of a handful of ultra-successful web startups that faced massive challenges scaling to meet growing demand, distilled a handful of lessons, and preached the fallacious gospel of overengineering.

The message was: you don’t have to think; just follow these ‘best practices’ and you can save yourselves the same problems these case-study companies faced. This is a microcosm of the broader problem in the tech industry. “Strategy” has gone from being a practice of deliberate thought and planning to blindly aping what everyone else is doing, which usually means copying the technical components without bothering to understand the non-technical ones that make such approaches possible, and assuming that all problems are the same.

I once had a client with a mandate from the top to “build everything as microservices.” On many occasions I have had to explain that successful IT organizations adopt microservices as a consequence of their success, not as its cause. By the same token, driving an F1 car won’t make you a racecar driver; you have to be a racecar driver to be able to drive an F1 car.

Furthermore, even putting the best driver behind the wheel of an F1 car will still result in an unbroken string of expensive and spectacular losses without a pit crew operating with laser precision and flawless process.

And all of this is a waste of time and money if the speed limit is 75.

So, adopting microservices in the hope that it will let us emulate more successful IT organizations is roughly akin to my buying a Ferrari in the hope that it will make me a millionaire like my friend who drives one. I also have to deal with the reality that he lives in Dubai and I live in Denver. No matter how cool the car is, I’m still going to get stuck in the snow in a way that he never will.

Chasing silver bullets is a recipe for crushing accidental complexity. Not understanding the broader social and organizational dynamics that enable certain architecture approaches is a recipe for failure.

Complexity has to go somewhere, but it doesn’t have to go everywhere. Overengineering architecture is not a substitute for architectural thinking.

The Rebirth of Software Architecture

As I watched that standing-room-only crowd in the largest space at the conference lap up the “easy answers” coming from the stage, I became worried for the future of software and architecture. There is far more demand (and more money to be made) in simply telling people what to think, but this is not sustainable. What our industry needs is more thought leaders who teach us how to think.

What I have seen in the generative AI space since 2021 is that we have models so convincing in their cleverness that they fool us into delegating our thinking to them while they vomit up the most statistically plausible response to our prompts. Our critical-thinking skills are atrophying just as we’re beginning to need them most. Our industry needs more thinking and less typing, but AI is only managing to give us more typing with less thinking.

We must return to the core skill of architectural thinking and develop that muscle. We need architecture models that offer more than the status quo is giving us. The software, the components, the organization, the teams, the environment, the economy, politics, our users: all of these are complex and nuanced, and they are all connected. We need a return to holism in software architecture.

I have spent the past few years attempting to develop a new model of software architecture that unifies the fragmented models and centers on architectural thinking. I have spent the past 12 months distilling this into a comprehensive book. This has been a daunting (and at times overwhelming) task, but I firmly believe we can all be better today than we were yesterday, and our field of software architecture can be better tomorrow than it is today. I suspect you believe this, too.

“Of all the various engineering disciplines that have emerged over the course of human history, software is arguably the youngest by a substantial margin. Civil, mechanical, and military engineering evolved over millennia. Chemical and electrical engineering span centuries. In contrast, software engineering has only been around for a handful of decades. We still have much to learn and discover.”

Michael Carducci (Mastering Software Architecture, Apress)